Concept

AR can reveal hidden worlds around us. Often these worlds are charming fantasies. I think it’s more powerful to reveal the layers that are already here but go unnoticed. I am creating a web app that encourages groups of friends to explore parks in and around New York City. As part of that, I have created a trail guide that provides directions through the park, as well as information on points of interest in the park. I think that AR is well suited to this guide because, instead of looking at a picture in a book, on a printout, or on their phone, hikers will be able to see the information situated directly on top of what they are seeing. I hope that this will be a very clear learning experience and that it will underline how much is hidden in the world around us that goes ignored.

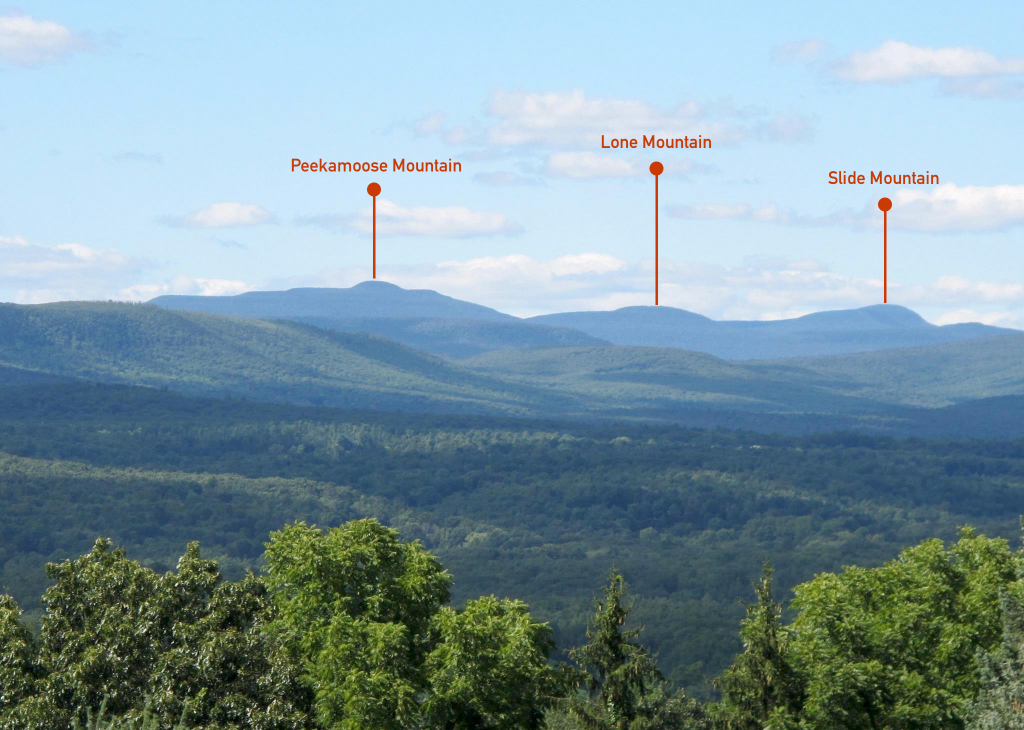

I selected Inwood Hill Park as my proof of concept location for this project. Inwood is a good ‘just right’ park for this project. It’s not too big, but not too small. Not too far away, but not as close as Central Park. It’s not a manicured park, but it’s not so wild that it would make first-time adventurers uncomfortable. Also, it is a very natural park. It has relatively little landscaping in its wild areas; most of its features were designed by nature, not Frederick Law Olmsted.

I had done some previous research about features and paths in the park, but work for this project started with an in-person visit to the park. I wanted to make sure that my path made sense and to identify places where AR elements would fit. I identified three points for possible AR experiences: the Shorakkopoch rock (it marks the supposed site where the Dutch bought the island from the Lenape), a glacial pothole, and whale rock.

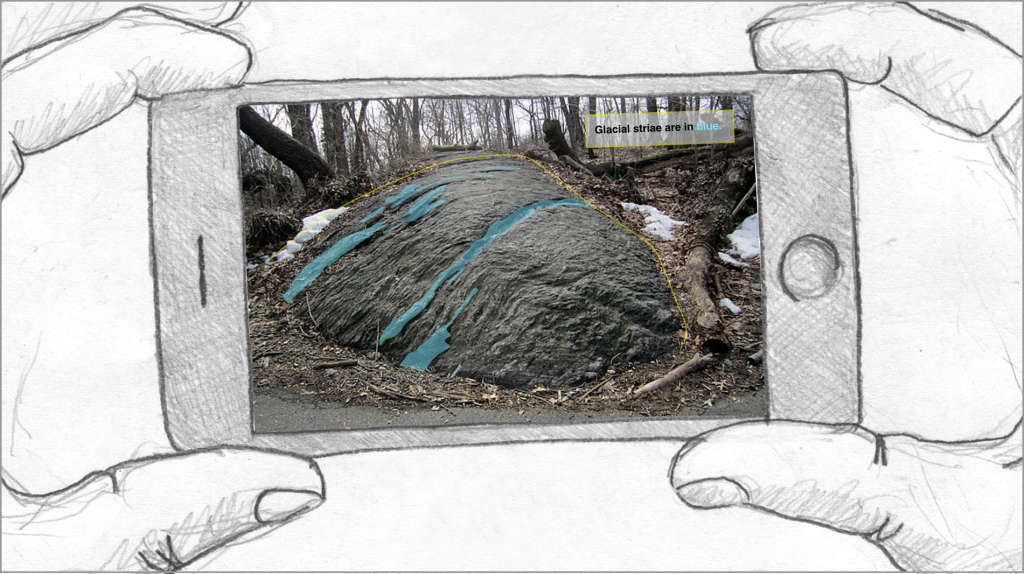

Whale rock seemed like the simplest site to augment, so I started with that. The rock has several deep grooves in it created by glacial movement. I wanted to create a way to highlight these glacial striae.

I imagine a simple overlay that would show where the visitor should be looking. Unlike other AR experiences, these moments should not be immersive and time-consuming. The main point of the project is to get people out into nature, not to have them looking at their phones the whole time. These short, digestible moments will give people an ‘Aha!’ moment, and then be over.

Build

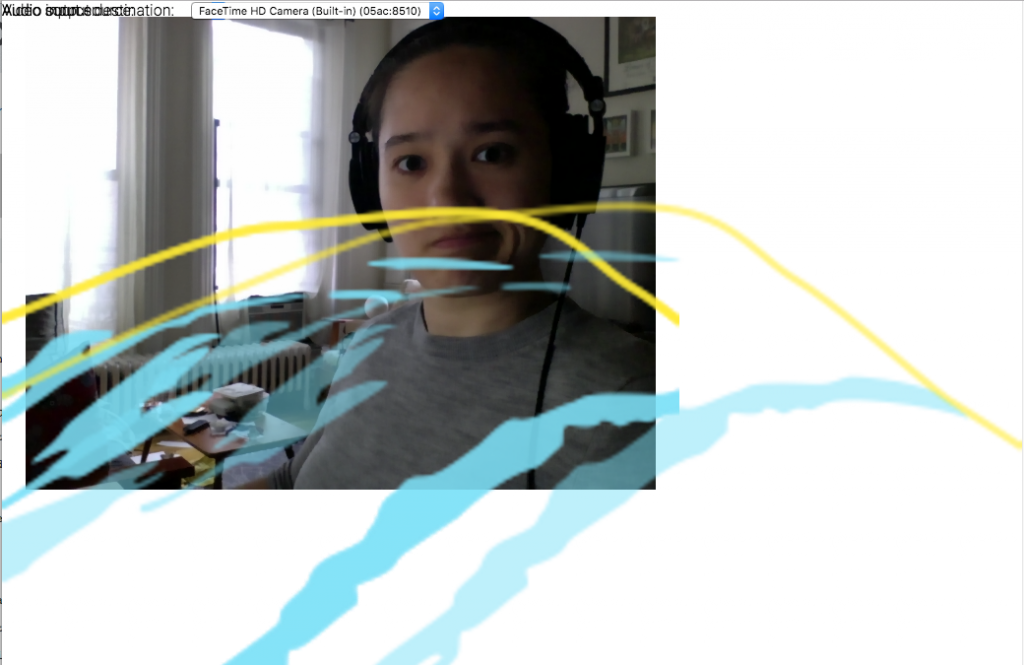

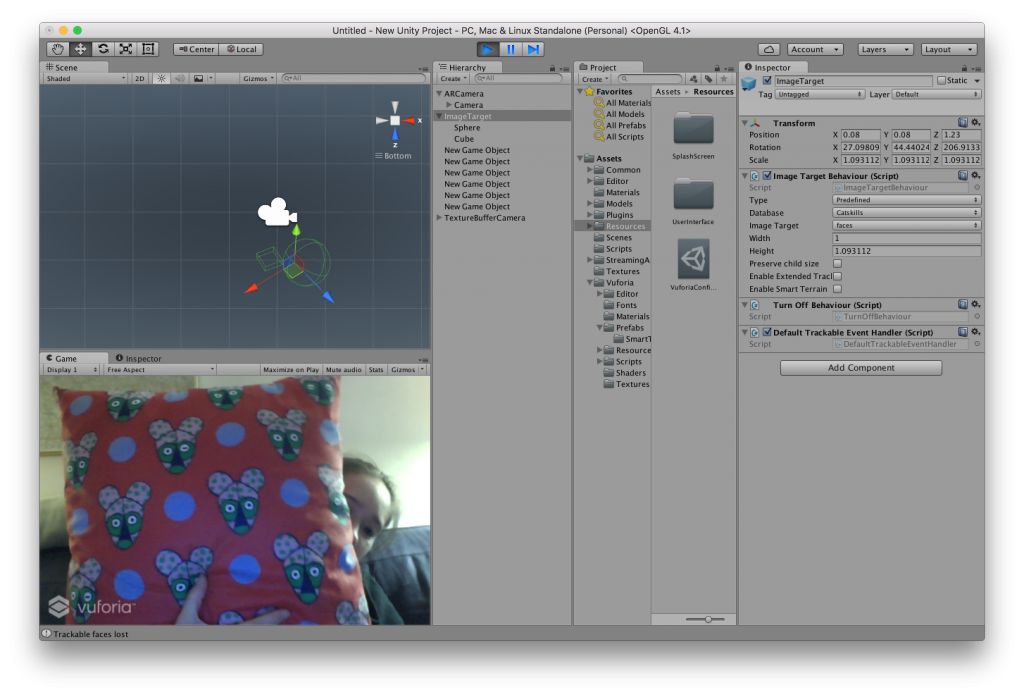

I elected to build this project with the Motion Stack library. This would allow me to keep these moments simple and in the browser, but still give me access to the sensors on the phone. The Orientation Cube and the RelativeHeading Image Panning functions both seemed particularly relevant. It was easy to build a working demo from the example code. I also built a short demo from a tutorial on accessing the phone camera from the browser.
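To make the idea of relative-heading panning concrete, here is a minimal sketch of the arithmetic involved. It is not the library's code or API; the function names are hypothetical, and it assumes the overlay is a full 360-degree panorama and that a heading in degrees is already available from the phone's orientation sensor.

```typescript
/** Wrap any angle in degrees into the range [0, 360). */
function normalizeDegrees(deg: number): number {
  return ((deg % 360) + 360) % 360;
}

/** Heading relative to where the visitor was facing when the overlay opened. */
function relativeHeading(currentDeg: number, startDeg: number): number {
  return normalizeDegrees(currentDeg - startDeg);
}

/** Horizontal offset in pixels into a 360-degree panorama of the given width. */
function panOffsetPx(relativeDeg: number, imageWidthPx: number): number {
  return (normalizeDegrees(relativeDeg) / 360) * imageWidthPx;
}

// In a browser, the heading could come from a `deviceorientation` event's
// `alpha` value. Assumption: the zero point and direction of rotation vary
// by platform, so the sign may need flipping on a real device.
```

Keeping the math in small pure functions like these would also make it possible to test the panning at a desk, without walking to the rock.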

However, the camera demo did not work well on mobile. I was unable to access the rear camera. After some frustrating debugging I was able to find two problems: 1) Accessing cameras from the browser does not work well on older hardware, or even on slightly older versions of Chrome. This affected me pretty significantly, because I’m an iPhone user and the phones I was testing with were older hand-me-downs. 2) The example code I was following on MDN did not seem to work. Adding a constraint to use the rear camera broke without giving any errors in the console.
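For reference, this is roughly what a rear-camera constraint can look like. It is a sketch, not the MDN example and not a diagnosis of what went wrong on my phones. One thing worth knowing is that a bare `facingMode` value is treated as a preference, so a browser that can't satisfy it may return a different camera without raising an error, while the `exact` form rejects instead. The `MediaDevicesLike` interface and the function names are stand-ins I made up so the snippet is self-contained; in a page, `navigator.mediaDevices` would be passed in.

```typescript
// Minimal stand-ins for the browser types so the sketch is self-contained.
type FacingConstraint = "environment" | { exact: "environment" };

interface CameraConstraints {
  audio: boolean;
  video: { facingMode: FacingConstraint };
}

interface MediaDevicesLike {
  getUserMedia(constraints: CameraConstraints): Promise<unknown>;
}

/** Build rear-camera constraints; `strict` asks for a rejection rather than a fallback camera. */
function rearCameraConstraints(strict: boolean): CameraConstraints {
  return {
    audio: false,
    video: { facingMode: strict ? { exact: "environment" } : "environment" },
  };
}

/** Try the strict constraint first, log why it failed, then retry with the loose one. */
async function openRearCamera(mediaDevices: MediaDevicesLike): Promise<unknown> {
  try {
    return await mediaDevices.getUserMedia(rearCameraConstraints(true));
  } catch (err) {
    // With `exact`, a device that has no matching camera rejects here,
    // which at least leaves something in the console to debug.
    console.warn("Strict rear-camera request failed:", err);
    return mediaDevices.getUserMedia(rearCameraConstraints(false));
  }
}
```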

Using MediaDevices.enumerateDevices() and then selecting the camera was successful. I built based on an example here. Now that I had both elements of the experience working, I could start combining them. That produced three bugs to be fixed. 1) The pano/overlay image covers the whole viewport, so it’s impossible to select the rear camera. This is the most fixable bug, but also the most frustrating because I had just spent so much time getting the camera to work. 2) The pano/overlay image is doubled for some reason. 3) If I remove the audio selectors from the HTML the video fails to load.
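The enumerate-then-select approach could also pick the rear camera automatically instead of relying on an on-screen selector. The sketch below is not the example I built from; the names are hypothetical, the label matching is a guess at how phones name their cameras, and the "last camera listed" fallback is an assumption that would need checking on real devices. Labels can also be empty until the user has granted camera permission.

```typescript
// Minimal stand-in for the browser's MediaDeviceInfo so the sketch is self-contained.
interface DeviceInfoLike {
  deviceId: string;
  kind: string; // "videoinput", "audioinput" or "audiooutput"
  label: string; // may be empty until camera permission has been granted
}

/** Pick a likely rear camera from an enumerateDevices() result, or undefined if there is no camera. */
function pickRearCameraId(devices: DeviceInfoLike[]): string | undefined {
  const cameras = devices.filter((d) => d.kind === "videoinput");
  if (cameras.length === 0) return undefined;
  const labelled = cameras.find((d) => /back|rear|environment/i.test(d.label));
  // Assumption: when no label matches, the last camera listed is the rear one.
  return (labelled ?? cameras[cameras.length - 1]).deviceId;
}

/** Video-only constraints pinned to one specific device. */
function constraintsForDevice(deviceId: string) {
  return { audio: false, video: { deviceId: { exact: deviceId } } };
}

// Browser usage (not run here; `video` is a hypothetical <video> element):
// const devices = await navigator.mediaDevices.enumerateDevices();
// const id = pickRearCameraId(devices);
// if (id) video.srcObject = await navigator.mediaDevices.getUserMedia(constraintsForDevice(id));
```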

Next Steps

- Fix the bugs. They all seem fixable, but annoying.

- Get an HTTPS site. I currently don’t have one set up, so I can’t host code that uses the webcam until I do.

- Work on the UX for the AR experiences specifically. When do I need to explain what glacial striae are? Is there an intermediate screen before the AR? What should be there?

- Test with an object. I can’t go to the park every day to test, so building a mockup of some kind to refine the interaction with seems like a good plan.

- User test. I need to make sure that this makes sense to other people too.